Visual perception and Neural representation of sensation

We are interested in how neural networks in the brain represent sensation and perception, especially visual information. Below is a list of recommended textbooks for students and others interested in this field (neuroscience, vision science, deep learning).

List of textbooks on neuroscience, vision science, and deep learning for students

We currently study the three research themes shown below.

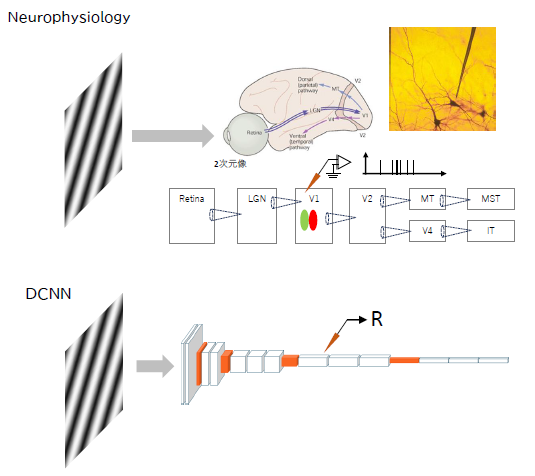

Representation in the middle layers of deep convolutional neural networks

A deep convolutional neural network (DCNN) is a feature extractor originally inspired by early findings in visual neuroscience. The spatial features of convolutional units in the early layers of a DCNN show trends similar to the receptive field (RF) characteristics of cortical neurons in area V1, the first stage of visual information processing in the cerebral cortex. Both systems have spatial frequency filters in their early stages, and the outputs of these filters are then pooled across space so that higher-layer units can represent more abstract information.
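The filter-then-pool idea can be illustrated with a minimal NumPy sketch. It assumes a Gabor patch as the spatial frequency filter, half-wave rectification, and non-overlapping max pooling; the function names, kernel parameters, and test grating are all illustrative choices and are not taken from any particular DCNN or from V1 data.

```python
import numpy as np

def gabor_kernel(size=15, wavelength=6.0, theta=0.0, sigma=3.0, phase=0.0):
    """Return a 2-D Gabor patch: a sinusoidal grating under a Gaussian envelope."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Coordinate along the direction in which the grating varies
    x_rot = x * np.cos(theta) + y * np.sin(theta)
    envelope = np.exp(-(x**2 + y**2) / (2.0 * sigma**2))
    carrier = np.cos(2.0 * np.pi * x_rot / wavelength + phase)
    kernel = envelope * carrier
    return kernel - kernel.mean()  # zero mean: no response to uniform luminance

def filter_valid(image, kernel):
    """Slide the kernel over the image (cross-correlation, 'valid' region only)."""
    windows = np.lib.stride_tricks.sliding_window_view(image, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)

def max_pool(feature_map, pool=4):
    """Non-overlapping max pooling; rows/columns that do not fill a block are dropped."""
    h = (feature_map.shape[0] // pool) * pool
    w = (feature_map.shape[1] // pool) * pool
    blocks = feature_map[:h, :w].reshape(h // pool, pool, w // pool, pool)
    return blocks.max(axis=(1, 3))

def pooled_response(image, theta):
    """Filter with a Gabor of orientation theta, rectify, then pool across space."""
    rectified = np.maximum(filter_valid(image, gabor_kernel(theta=theta)), 0.0)
    return max_pool(rectified)

# Illustrative stimulus: a vertical grating whose luminance varies along x
x = np.arange(64)
vertical_grating = np.tile(np.cos(2.0 * np.pi * x / 6.0), (64, 1))
matched = pooled_response(vertical_grating, theta=0.0)
orthogonal = pooled_response(vertical_grating, theta=np.pi / 2)
```

In this sketch the filter whose orientation and wavelength match the grating responds much more strongly than the orthogonal one, and pooling makes that response tolerant to small shifts in position.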

Since the image classification accuracy of DCNNs is comparable to that of humans, DCNNs and the cortical visual system may share common computational algorithms for representing object information. However, it is not clear how this ability is acquired or how visual features are represented in the networks.

A similar issue, the so-called “black-box problem”, exists in neuroscience. There it may be attributed to the complexity of the brain, which has far more units and more complex connectivity (the human cerebral cortex has roughly 16 billion neurons and about 160 trillion synapses).

To understand what visual neurons represent, neurophysiological experiments typically measure the receptive field profile. The same method can be applied to artificial neural networks to visualize the internal processing of a model. By measuring receptive field characteristics, we aim to reveal what visual features each unit encodes and how this information is integrated.
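One common way to map a receptive field, in physiology and in models alike, is reverse correlation with white-noise stimuli. The sketch below assumes that approach; the text above does not specify which mapping method is used here. `hidden_unit` is a hypothetical stand-in for a real model unit, and `true_rf` is an invented ground truth used only to check that the estimate recovers it.

```python
import numpy as np

rng = np.random.default_rng(0)

def hidden_unit(stimuli, weights):
    """Stand-in for a model unit: rectified linear response to each stimulus."""
    drive = np.tensordot(stimuli, weights, axes=([1, 2], [0, 1]))
    return np.maximum(drive, 0.0)

def estimate_rf(stimuli, responses):
    """Response-weighted average of the stimuli (spike-triggered-average style)."""
    return np.tensordot(responses, stimuli, axes=(0, 0)) / responses.sum()

# Hypothetical ground-truth RF: an ON region with a weaker OFF flank
size = 9
true_rf = np.zeros((size, size))
true_rf[3:6, 3:6] = 1.0
true_rf[3:6, 6:8] = -0.5

# Probe the unit with Gaussian white-noise frames and average by response
noise = rng.standard_normal((20000, size, size))
rf_hat = estimate_rf(noise, hidden_unit(noise, true_rf))

# Similarity between the estimated and the true spatial profile
similarity = np.corrcoef(rf_hat.ravel(), true_rf.ravel())[0, 1]
```

For a real network, `hidden_unit` would be replaced by a forward pass that reads out one unit's activation. Units in deeper layers are usually more nonlinear, so a simple response-weighted average may capture only part of what they encode.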

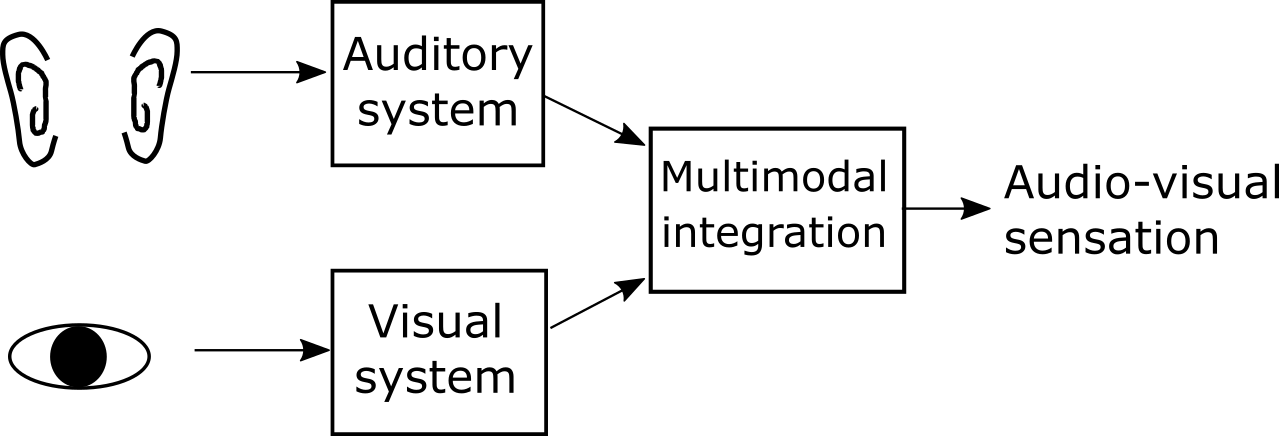

Neural mechanisms of multimodal integration

Our nervous system integrates multimodal sensory signals to estimate the surrounding environment. Individual sensory inputs are processed in different brain areas and then sent to multimodal areas. Sensory cortical areas might also interact with each other to enhance signals or suppress noisy inputs, stabilizing the sensory state.

Multimodal integration might be important for compensating for missing information or disambiguating equivocal situations. However, mismatched multisensory signals can sometimes confuse us. For example, when the body stays in the same place but the optic flow signals forward motion, we experience an unusual sensation.

Human psychophysical studies have reported that subjects perceive visual motion when an auditory motion stimulus is presented while they observe a static image. This indicates that incongruent motion between the two modalities might bias subjects' direction discrimination. Numerous neurophysiological studies suggest that a response bias in a neural population in a sensory area biases subjects' decisions. Therefore, the bias in motion discrimination might result from a response bias in a population of cortical neurons.

To understand the neural basis of multimodal sensory integration, we first conduct psychophysical experiments using audio-visual motion stimuli. By manipulating the motion parameters of the audio-visual stimuli, we measure the magnitude of the bias that might reflect audio-visual integration. In parallel, we measure EEG signals while subjects perform the psychophysical tasks. We then analyze how psychophysical performance correlates with the neural bias that might be caused by multimodal sensory signals.
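One way to quantify such a bias is to fit a psychometric function to direction judgments and compare the point of subjective equality (PSE) between sound conditions. The sketch below is a minimal illustration under stated assumptions: a logistic psychometric function, a signed visual motion strength as the stimulus axis (negative for leftward), a grid-search maximum-likelihood fit, and synthetic response counts generated from a known curve. None of these choices is taken from the actual experiments.

```python
import numpy as np

def logistic(x, pse, slope):
    """Probability of a 'rightward' response at signed motion strength x."""
    return 1.0 / (1.0 + np.exp(-slope * (x - pse)))

def fit_pse(levels, n_right, n_trials):
    """Grid-search maximum-likelihood fit of a logistic; returns (pse, slope)."""
    pse_grid = np.linspace(-1.0, 1.0, 201)
    slope_grid = np.linspace(1.0, 20.0, 39)
    pse, slope = np.meshgrid(pse_grid, slope_grid, indexing="ij")
    p = logistic(levels[None, None, :], pse[..., None], slope[..., None])
    p = np.clip(p, 1e-9, 1.0 - 1e-9)  # avoid log(0)
    loglik = (n_right * np.log(p) + (n_trials - n_right) * np.log(1.0 - p)).sum(axis=-1)
    i, j = np.unravel_index(np.argmax(loglik), loglik.shape)
    return pse_grid[i], slope_grid[j]

# Synthetic data (not experimental results): counts from a known curve
levels = np.linspace(-0.6, 0.6, 7)        # signed visual motion strength
n_trials = np.full(levels.shape, 200.0)   # trials per level

def synthetic_counts(true_pse, true_slope=8.0):
    """Expected number of 'rightward' responses, rounded to whole trials."""
    return np.round(n_trials * logistic(levels, true_pse, true_slope))

# Assumed effect: rightward sound shifts the curve left, leftward sound right
pse_sound_right, _ = fit_pse(levels, synthetic_counts(-0.1), n_trials)
pse_sound_left, _ = fit_pse(levels, synthetic_counts(+0.1), n_trials)
bias = pse_sound_left - pse_sound_right   # PSE shift between sound conditions
```

With real data, `n_right` would hold the observed counts for each stimulus level, and a continuous optimizer with confidence intervals would normally replace the coarse grid search.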

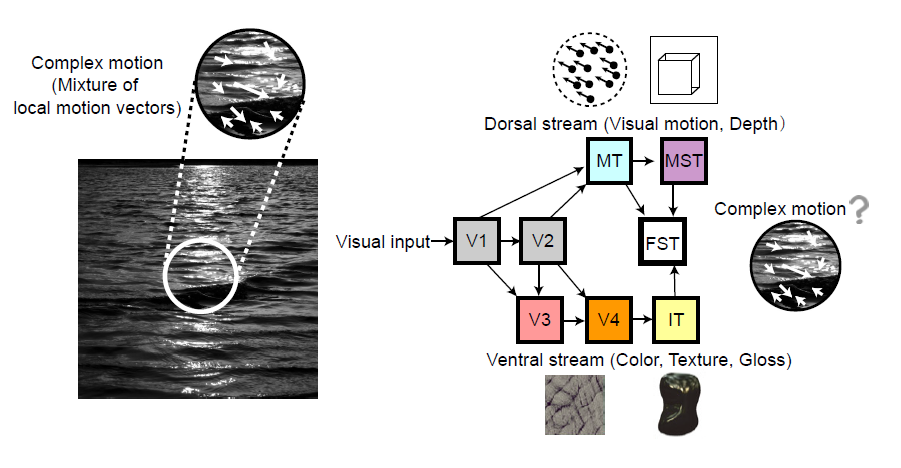

Neural mechanisms of complex motion perception / Representation of material perception "Shitsukan" defined by motion cues

The spatial distribution of visual motion in natural scenes is typically non-uniform. For example, in liquid flows, motion vectors with various directions and speeds are spatially distributed in a complex manner, yet we can easily discriminate liquid flows from noise motion using only the spatial features of the motion flow.

Motion information is encoded in dorsal visual areas such as area MT. Since the majority of MT neurons are robustly driven by uniform motion, in which directions and speeds are uniform across space, the spatial features of motion might be represented elsewhere. We are interested in which cortical areas represent complex motion information.

Neurons in area FST are selective for spatial structure defined by motion (Mysore et al., 2010). Area FST is therefore one candidate for representing the spatial features of complex motion by integrating multiple direction components.

Recently, human psychophysics showed that the spatial smoothness of local motion vectors, characterized by the mean discrete Laplacian of the motion vectors, correlates with the rated impression of liquidness (Kawabe et al., 2015). By applying such higher-order motion statistics to physiological and psychophysical experiments, we examine which cortical visual stages represent complex motion information.
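As a rough illustration of this kind of statistic, the sketch below computes a mean discrete Laplacian for a dense motion field. It assumes a 4-neighbour Laplacian applied separately to the horizontal (`u`) and vertical (`v`) components and averages the magnitude over interior pixels. The exact definition and normalization in Kawabe et al. (2015) may differ, and the example flow fields are synthetic.

```python
import numpy as np

def mean_discrete_laplacian(u, v):
    """Mean magnitude of the 4-neighbour Laplacian of a flow field (interior pixels).

    u, v: 2-D arrays holding the horizontal and vertical motion components.
    Smaller values indicate a spatially smoother motion field.
    """
    def laplacian(f):
        return (f[:-2, 1:-1] + f[2:, 1:-1] + f[1:-1, :-2] + f[1:-1, 2:]
                - 4.0 * f[1:-1, 1:-1])
    return float(np.mean(np.hypot(laplacian(u), laplacian(v))))

rng = np.random.default_rng(0)
yy, xx = np.mgrid[0:64, 0:64].astype(float)

# Smooth synthetic flow: directions and speeds change gradually across space
smooth_u = np.sin(2.0 * np.pi * xx / 32.0)
smooth_v = np.cos(2.0 * np.pi * yy / 32.0)

# Noise flow: an independent random vector at every location
noise_u = rng.standard_normal((64, 64))
noise_v = rng.standard_normal((64, 64))

smooth_value = mean_discrete_laplacian(smooth_u, smooth_v)
noise_value = mean_discrete_laplacian(noise_u, noise_v)
linear_value = mean_discrete_laplacian(xx, yy)  # pure expansion: zero Laplacian
```

Under these assumptions the smooth field yields a much smaller value than the noise field, and a linearly varying field yields zero, which matches the intuition that the statistic measures local irregularity rather than overall speed.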

In this research theme, we take several approaches to understanding the neural mechanisms of complex motion perception. The first approach is to measure neural activity in cortical visual areas; this has been done and is currently being pursued in collaboration with other labs. Another approach is to analyze the visual features that represent complex motion (e.g., liquid flow) in natural movies. Although human psychophysics has already proposed motion statistics that correlate highly with perceptual decisions, we will analyze the distribution of candidate motion statistics in movies of natural scenes. In addition, artificial neural network models will be used to investigate the visual features that contribute to liquidness ratings.